Unleashing the GenAI Elephant: Transform Your Organisation One Byte at a Time

As organisations set out to embed GenAI into their processes, products and services in search of higher productivity and a competitive edge, the most valuable piece of counsel may be to break the work into pieces and take it one step at a time: achievement is incremental.

“There is only one way to eat an elephant … a bite at a time.”

Vibrant Serenity: AI-Generated Elephant Art by Eva Lee

How to get started?

Introducing technological change into an organisation presents a different set of management challenges compared to the work of competent project administration.

Successfully implementing GenAI in an organisation involves a strategic approach that balances tried-and-proven use cases with innovative, high-risk applications.

The diagram below outlines the four high-level stages in a roadmap for GenAI implementation: strategy, proven use cases, innovative use cases and fostering a culture of innovation, adaptation, experimentation and continuous learning.

Image created by Author [Amina Crooks]

Some examples of the first proven use cases, which may pale in comparison with what comes later, are customer support, interactive AI browser chatbots and enterprise knowledge management.

A common pitfall for organisations embarking on this GenAI implementation journey is failing to involve users early, address their concerns, and provide ongoing support. Avoiding it means preparing the organisation, choosing opinion leaders wisely, and balancing benefits and responsibilities to foster acceptance and minimise resistance. Failure to do so can result in outcomes that fall short of expectations and in disenchantment with the new technology and its potential capabilities.

Effective GenAI implementation requires viewing it as an internal marketing effort, involving iterative planning, engaging both top management and end-users, and ensuring thorough preparation and resource allocation. Most often, organisations spin up a GenAI hub or team built around IT and engineering skills and experience, without paying sufficient attention to bringing in talent that can help with product strategy, business cases, metrics and communication, and that can bridge business advisors and users with technical teams.

How to Implement GenAI?

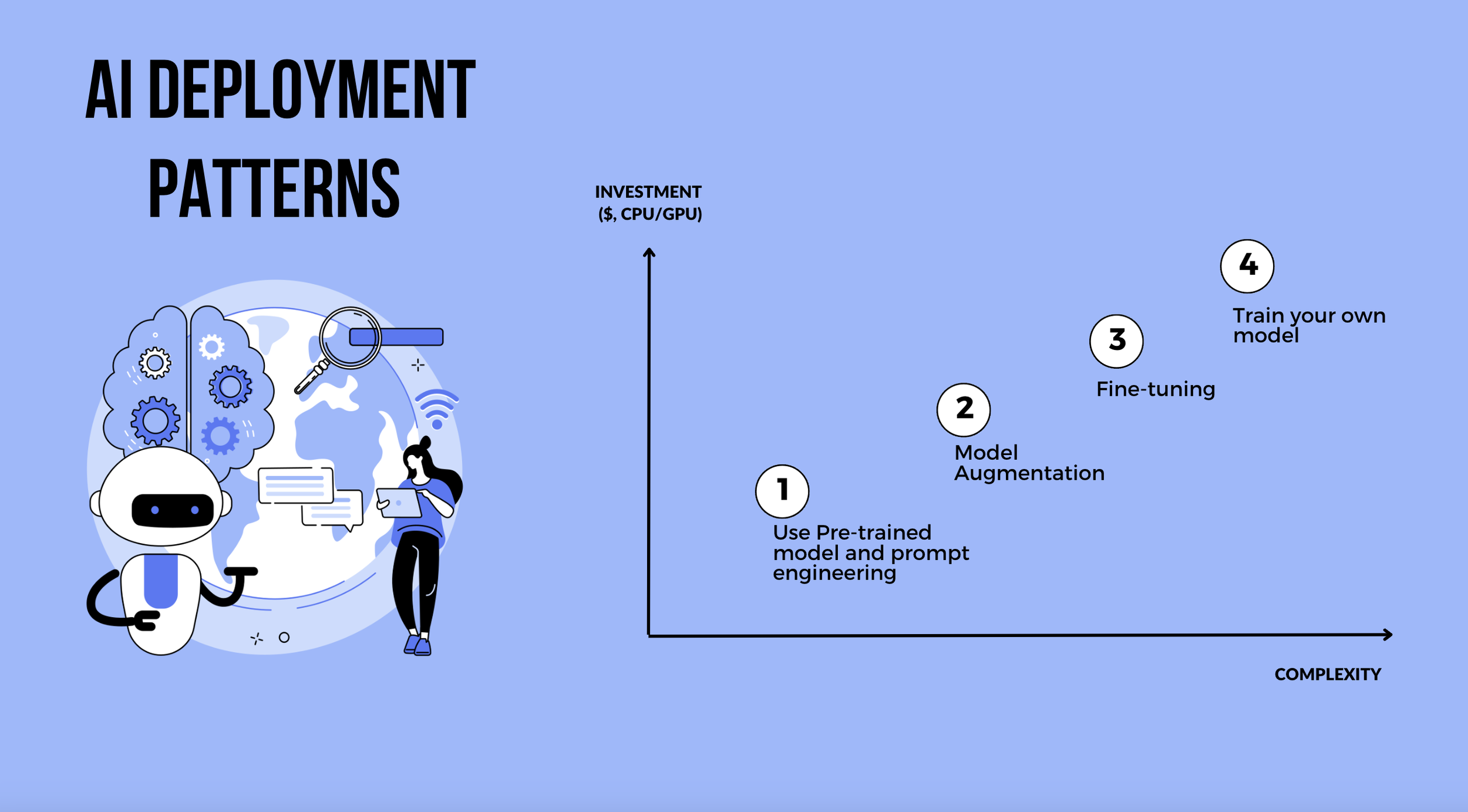

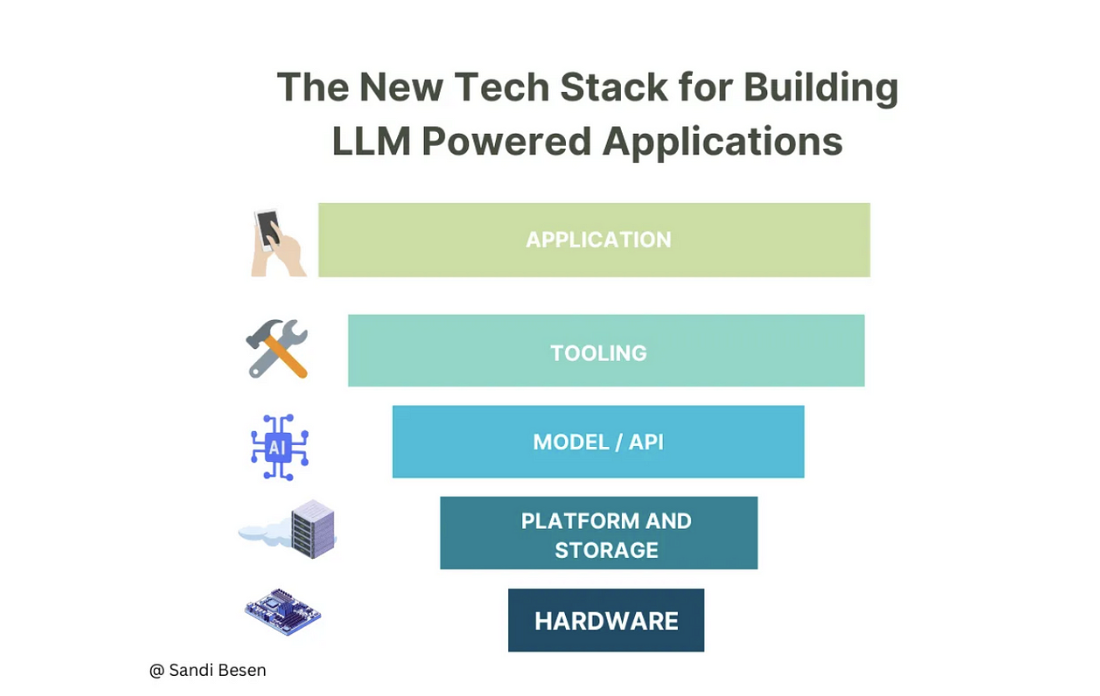

Deploying GenAI in organisations can follow various patterns depending on specific needs and resources. Right-sizing the AI investment means considering the monetary investment, computing power, infrastructure, tools, skilled workforce and build time to production.

Below are four common deployment patterns: 1. Use pre-trained model and prompt engineering; 2. Model augmentation; 3. Fine-tuning; and 4. Train your own model.

Image created by Author [Amina Crooks]

1. Use Pre-Trained Model and Prompt Engineering

This approach leverages existing pre-trained models, such as OpenAI’s GPT, which are already trained on vast datasets. Organisations can employ prompt engineering to steer the outputs by crafting specific prompts that guide the AI to generate desired responses, without changing the model itself. This method is cost-effective, quick to implement, and ideal for tasks like text generation, summarisation, and basic customer service interactions.

2. Model Augmentation

Model augmentation involves enhancing pre-trained models with additional, domain-specific data. Methods such as Retrieval-Augmented Generation (RAG) and API integrations connect the model to external data sources. RAG combines a retrieval mechanism with a generative model to pull relevant information from large datasets in real time, improving response accuracy. APIs provide access to external tools and databases, enabling models to fetch and use up-to-date, domain-specific data. Tools such as Hugging Face’s Transformers and OpenAI’s API facilitate these augmentations, allowing for dynamic, context-aware AI applications tailored to specific organisational needs.

The model can generate more accurate and contextually appropriate outputs for specific industry applications by integrating relevant datasets. This method strikes a balance between leveraging powerful pre-trained models and customising them for specialised tasks, such as legal document analysis or medical report generation.
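The retrieve-then-generate idea can be sketched in a few lines. This is a toy version under stated assumptions: the `DOCUMENTS` list is a made-up knowledge base, and retrieval uses simple word overlap, whereas a production system would more likely use embeddings and a vector store. The final prompt is what would be sent to the generative model.

```python
import re
from collections import Counter

# Hypothetical in-house knowledge base; a real system would load documents from a store.
DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Premium support is available on weekdays between 08:00 and 18:00.",
    "Passwords can be reset from the account settings page.",
]


def tokenize(text):
    """Lowercase the text and return a bag of words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Rank documents by the number of word occurrences shared with the query."""
    query_words = tokenize(query)
    scored = [
        (sum((tokenize(doc) & query_words).values()), doc) for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_augmented_prompt(query, documents, top_k=1):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


print(build_augmented_prompt("How long do refunds take?", DOCUMENTS))
```

Because the model only sees the retrieved passages, updating the knowledge base changes the answers without retraining anything.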

3. Fine-Tuning

Fine-tuning adjusts pre-trained models by training them further on a specific dataset. This allows the model to better understand and generate outputs aligned with the organisation’s specific context and requirements. Fine-tuning is particularly effective for applications requiring high accuracy and relevance, such as personalised marketing content or industry-specific chatbots. However, fine-tuning a model can be resource-intensive, requiring significant computational power and expertise. It may also overfit the model to the specific dataset, reducing generalisability. Additionally, it poses data privacy risks and requires ongoing maintenance and updates to remain effective.
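One practical guard against the overfitting risk mentioned above is to hold back part of the dataset for validation before any training starts. The sketch below shows only that preparation step, not the training itself. The `prompt` and `completion` field names and the example records are assumptions; the exact file layout depends on the training tool or provider.

```python
import json
import random

# Hypothetical labelled examples; in practice these come from reviewed organisational data.
EXAMPLES = [
    {"prompt": f"Customer message {i}", "completion": f"Approved reply {i}"}
    for i in range(10)
]


def split_examples(examples, validation_fraction=0.2, seed=42):
    """Shuffle deterministically and hold back a validation slice."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]


def to_jsonl(examples):
    """Serialise examples as one JSON object per line."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)


train, validation = split_examples(EXAMPLES)
print(len(train), "training examples,", len(validation), "validation examples")
print(to_jsonl(validation))
```

If quality on the validation slice stops improving while quality on the training slice keeps rising, that is a common sign the model is memorising rather than generalising.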

4. Train Your Own Model

Training a GenAI model from scratch using proprietary datasets requires substantial computational resources, expertise, and time. However, it also offers the highest level of customisation and control. This approach is suitable for organisations with unique needs that cannot be met by existing models, ensuring the AI aligns closely with proprietary processes and data nuances. It is often used in cutting-edge research and development within specialised fields.

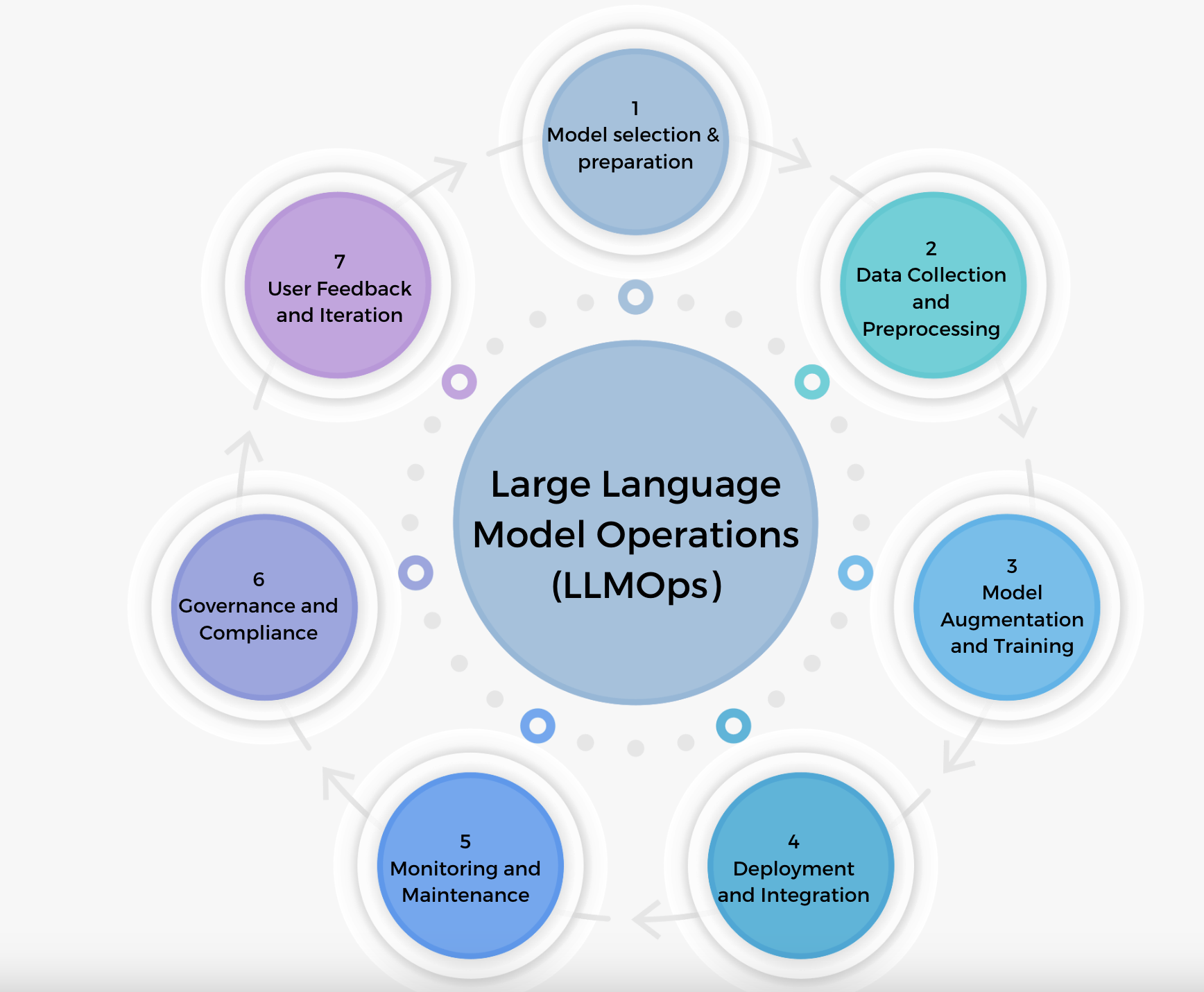

Large Language Model Operations

LLMOps (Large Language Model Operations) involves managing and optimising the lifecycle of large language models (LLMs) within an organisation.

The progression from DevOps to MLOps to now LLMOps follows many of the same engineering processes and steps to ensure a secure and highly functional pipeline and system.

Image created by Author [Amina Crooks]

Pre-trained models can be used as starting points, which massively speeds up an organisation's ability to spin up an AI product or service. That said, there are still many painstaking steps and data processing activities that need to be done well to get good results.

Organisations still need to gather relevant datasets, then clean and preprocess the data to ensure quality, addressing issues like missing values, noise, and formatting inconsistencies.
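A minimal sketch of such a cleaning pass is shown below, assuming text records exported from a ticketing system. The `RAW_RECORDS` data and helper names are hypothetical, and real pipelines usually need rules specific to their own data.

```python
import re

# Hypothetical raw records, as they might arrive from a ticketing export.
RAW_RECORDS = [
    {"id": 1, "text": "  Printer   not working\n"},
    {"id": 2, "text": None},
    {"id": 3, "text": "printer not working"},
    {"id": 4, "text": "<p>Invoice  missing</p>"},
    {"id": 5, "text": ""},
]


def clean_text(text):
    """Strip simple HTML tags and collapse runs of whitespace."""
    without_tags = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", without_tags).strip()


def clean_records(records):
    """Drop missing or empty texts, normalise formatting, and remove duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        if not record.get("text"):
            continue  # missing value
        text = clean_text(record["text"])
        key = text.lower()
        if not text or key in seen:
            continue  # empty after cleaning, or a case-insensitive duplicate
        seen.add(key)
        cleaned.append({"id": record["id"], "text": text})
    return cleaned


print(clean_records(RAW_RECORDS))
```

Here the missing and empty records are dropped, the stray markup and whitespace are normalised, and the third record is discarded as a duplicate of the first.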

Augmenting the model requires developing a system of inputs and connections between RAG components, APIs and other interfaces, using frameworks like LangChain that are designed to simplify the development of LLM applications.

Deploying the trained model into the production environment requires ensuring the deployment pipeline supports scalability, reliability, and security.

Summary

Scaling GenAI across an organisation requires careful planning and strategic execution. The key to success lies in focusing on specific use cases that deliver real value, allowing ample time and resources for learning and experimentation, and paying equal attention to changes in people, organisational processes, structure, and culture.

Starting small with high-impact use cases and breaking them down into manageable stages can demonstrate incremental value and help refine the approach.

A successful GenAI implementation relies on understanding that technology is only an enabler; the real challenge is integrating it into the existing business framework and aligning it with customer needs.

By focusing initial efforts and fostering a startup mentality within teams, organisations can build processes and systems that are scalable and repeatable.

Addressing common challenges such as data democratisation, governance, and funding is crucial. Equally important is the continuous communication and involvement of all stakeholders to build trust and ensure smooth change management.

Are your current organisational processes agile enough to incorporate the rapid advancements in GenAI? How prepared is your team to handle the cultural shift that comes with integrating GenAI into everyday operations? What are the real customer problems that GenAI can solve for your business?

Thanks for reading!